The Bifurcation of the AI Cloud Compute Market

This report examines how the GPU-centric cloud market is diverging into distinct tiers of providers, each capturing value in different ways. We analyze global supply/demand trends and highlight a growing divide in fortunes.

Introduction and Key Findings

AI compute demand is exploding, straining global data center capacity to its limits. Occupancy rates for third-party data centers sit at record highs, and overall power demand from AI workloads is surging (~97% CAGR for AI datacenter capacity 2022–2026). This report examines how the GPU-centric cloud market – the backbone of AI – is diverging into distinct tiers of providers, each capturing value (or struggling to) in different ways. We define four main categories of GPU compute sellers, analyze global supply/demand trends, and highlight a growing divide in fortunes:

Hyperscalers (AWS, Google, Microsoft, Oracle) enjoy massive internal AI workloads and booming enterprise demand, investing unprecedented sums (over $190 billion in AI infrastructure capex in 2024, with over $325 billion projected for 2025) to maintain dominance.

"NeoClouds" (specialized GPU cloud upstarts like CoreWeave, Crusoe, Lancium) are growing at triple-digit rates (400-750% YoY), leveraging better price-performance and fast provisioning to win overflow demand from hyperscalers and cost-conscious AI labs.

Marketplaces (Runpod, SFCompute, Compute Exchange, Vast.ai, etc.) aggregate spare GPUs from various owners, offering bargain prices – but they rarely land large enterprise deals and face a glut of supply (H100 rental prices crashed from $8/hr to ~$2/hr in late 2024 amid oversupply).

Bare-Metal GPU Datacenters (colos or enterprises with GPUs but no cloud software stack) often sit on underutilized hardware. Without robust orchestration platforms or large sales teams, these operators struggle to monetize their GPUs, resorting to reselling capacity via third-party marketplaces.
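The ~97% CAGR for AI datacenter capacity cited in the introduction compounds quickly. The sketch below converts it into a total growth multiple over the four years from 2022 to 2026. It assumes the rate holds evenly every year, which an aggregate CAGR figure does not guarantee, so treat the result as an order-of-magnitude check rather than a forecast.

```python
def compound_multiple(cagr: float, years: int) -> float:
    """Total growth multiple implied by a constant compound annual growth rate."""
    return (1.0 + cagr) ** years


# ~97% CAGR for AI datacenter capacity, 2022 to 2026 (figure from the report);
# assumes the rate applies uniformly in each of the four years.
capacity_multiple = compound_multiple(0.97, 2026 - 2022)
print(f"Implied capacity multiple, 2022 to 2026: {capacity_multiple:.1f}x")
```

At that rate, 2026 capacity would be roughly 15 times its 2022 level.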

AI is taking over the datacenter. AI workloads now account for ~13% of global datacenter demand and are on track to reach 28% by 2027, more than doubling their share in just two years. Total demand (≈62 GW today) is set to grow 50%+ by 2027, outpacing supply and keeping markets tight. However, only a few players will capitalize on this wave of AI demand, and the companies that spot these trends early and act fast stand to gain the most.
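Share figures understate how fast AI demand grows in absolute terms. The sketch below converts the report's percentages into gigawatts. It assumes total demand grows by exactly 50% (the text says "50%+", so this is a lower bound) and that the 13% and 28% shares apply to the same total-demand measure.

```python
TOTAL_DEMAND_TODAY_GW = 62.0  # "≈62 GW today" (from the report)
AI_SHARE_TODAY = 0.13         # "~13% of global datacenter demand"
AI_SHARE_2027 = 0.28          # "on track to hit 28% by 2027"
TOTAL_GROWTH_TO_2027 = 0.50   # "50%+"; the lower bound is assumed here

ai_today_gw = TOTAL_DEMAND_TODAY_GW * AI_SHARE_TODAY
total_2027_gw = TOTAL_DEMAND_TODAY_GW * (1 + TOTAL_GROWTH_TO_2027)
ai_2027_gw = total_2027_gw * AI_SHARE_2027

print(f"AI demand today: ~{ai_today_gw:.1f} GW of {TOTAL_DEMAND_TODAY_GW:.0f} GW")
print(f"AI demand 2027:  ~{ai_2027_gw:.1f} GW of {total_2027_gw:.0f} GW")
print(f"Growth in absolute AI demand: ~{ai_2027_gw / ai_today_gw:.1f}x")
```

On these inputs, AI demand grows from about 8 GW to about 26 GW, roughly 3.2x in absolute terms, while its share of the total rises from 13% to 28%.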

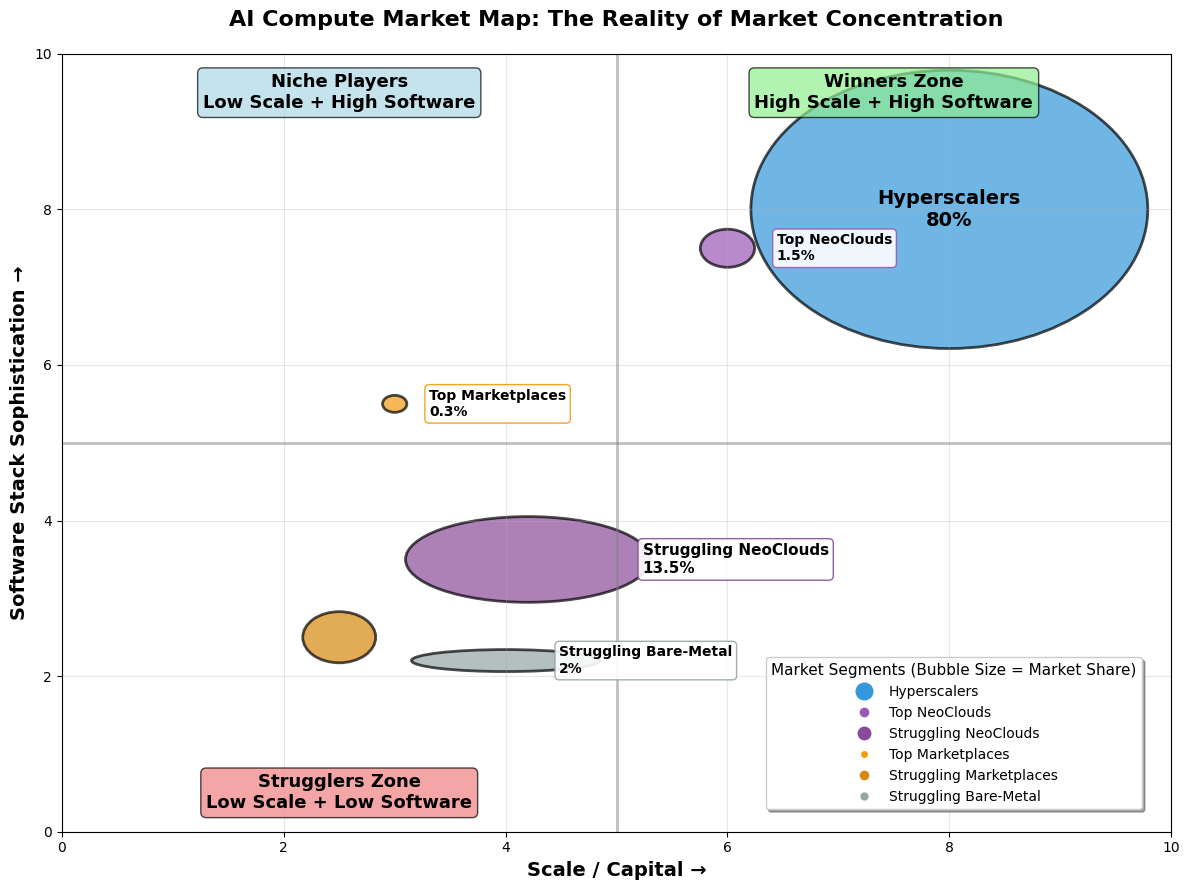

Our analysis finds a bifurcated market: hyperscalers and some NeoClouds like CoreWeave have captured outsized growth (fed by the AI boom and their agility), while unspecialized hosters fall behind with very low utilization. Enterprises themselves are increasingly "verticalizing" AI compute, with firms like XTX Markets (quant trading) building huge private GPU clusters (25,000+ GPUs) to avoid cloud constraints. The result is a rapidly evolving landscape with new opportunities, and risks, for investors and buyers to navigate.

Figure: The GPU Cloud Market Matrix - Four types of sellers competing for AI compute demand

1. GPU Cloud Ecosystem: Four Types of Sellers

a. Hyperscalers: The AI Superpowers

Amazon, Microsoft, Google, and Oracle dominate global AI compute with millions of GPUs, custom silicon (TPUs, Trainium), and nearly $200B in AI infra capex in 2024. Microsoft alone bought ~485,000 GPUs, targeting 1.8M live by year-end; Meta is at ~600,000 GPUs.

But they aren't just powering external clients—they consume much of this compute themselves, training frontier models (like GPT-4-class systems), running recommendation engines, and deploying generative AI across their own products.

Public cloud AI services like Azure OpenAI and Google Vertex AI are growing fast (~20% YoY), but internal workloads remain a major driver of hyperscaler demand.

Their scale, global reach, and full-stack platforms make them the default AI backbone for enterprises. Yet cracks are emerging: slow provisioning, vendor lock-in, and opaque pricing are pushing some users toward more nimble alternatives.

b. NeoClouds: Specialized GPU Clouds for AI/HPC

CoreWeave, Crusoe, Lambda Labs, and Lancium represent a fast-rising class of specialized GPU cloud providers built for AI and HPC. They deploy state-of-the-art Nvidia GPUs (B200s/B300s, H200s, etc.) with robust software stacks (Kubernetes, container orchestration, ML frameworks) that rival Big Cloud, but at 50-70% lower prices and with faster provisioning. CoreWeave alone scaled from ~53,000 to 250,000+ GPUs in 2024, hitting $1.9B in revenue (up 730% YoY), and its revenue grew ~5x year over year again in Q1 2025. A $7.5B pre-purchase of Nvidia hardware gave it early access to H200s and let it capture hyperscaler overflow.

NeoClouds win by pairing hardware at scale with a developer-first experience: intuitive UX, fast onboarding, first-mover access to state-of-the-art hardware, and real-time support via Slack/Discord.

But not all survive. Those lacking a software stack or support layer are struggling—GPUs alone aren't enough. With hardware costs high and capital tight, the gap between winners and distressed players is widening fast.

NeoClouds lack the hyperscalers' economies of scale in data center operations, so their unit costs for hardware, power, and networking run higher and their margins are razor-thin. Higher unit costs plus heavy debt mean they survive only if utilization stays above 50% and revenue isn't dominated by a single client. Yet investors still assign multi-billion-dollar valuations because agile players are landing hyperscaler-sized wins, such as Crusoe's 200 MW, 100k-GPU Texas campus pre-leased to a Fortune 100 customer. The eventual winners will be those that lock in abundant power at the right locations and a diversified customer mix; over-leveraged, single-tenant NeoClouds risk a hard fall.
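One way to see where a utilization threshold like ">50%" comes from is a simple break-even calculation. A GPU's fixed hourly cost (depreciation, debt service, operating costs) has to be covered by the hours it is actually billed. The sketch below is a minimal model under stated assumptions. All cost inputs are hypothetical, interest is charged on the full purchase price every year (ignoring principal paydown), and operating cost is treated as fixed per installed hour even though real power draw varies with load.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass
class GpuEconomics:
    """Per-GPU cost inputs. All example values used below are hypothetical."""
    capex_per_gpu: float         # purchase and install cost, USD
    amortization_years: float    # depreciation horizon
    annual_interest_rate: float  # cost of debt, charged on full capex (simplification)
    opex_per_gpu_hour: float     # power, cooling, space, staff per installed GPU-hour

    def cost_per_installed_hour(self) -> float:
        """Cost of one installed GPU for one hour, whether or not it is rented."""
        depreciation = self.capex_per_gpu / (self.amortization_years * HOURS_PER_YEAR)
        interest = self.capex_per_gpu * self.annual_interest_rate / HOURS_PER_YEAR
        return depreciation + interest + self.opex_per_gpu_hour


def break_even_utilization(econ: GpuEconomics, price_per_gpu_hour: float) -> float:
    """Fraction of hours a GPU must be billed at `price_per_gpu_hour` to cover costs.

    A result above 1.0 means the price cannot cover costs at any utilization."""
    return econ.cost_per_installed_hour() / price_per_gpu_hour


# Hypothetical debt-financed H100-class GPU
example = GpuEconomics(capex_per_gpu=25_000, amortization_years=5,
                       annual_interest_rate=0.10, opex_per_gpu_hour=0.25)

for price in (8.0, 4.0, 2.0):
    print(f"${price:.2f}/GPU-hr -> break-even utilization "
          f"{break_even_utilization(example, price):.0%}")
```

With these assumed inputs, break-even utilization is about 14% at $8/hr but climbs to about 55% at $2/hr, which illustrates why falling rental prices leave debt-financed operators so little room for error.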

c. Marketplaces: GPU Compute Brokers

Platforms like Vast.ai, RunPod, and SFCompute aggregate spare GPU capacity (from miners, hobbyists, and small data centers) and rent it out, usually to smaller buyers or individual researchers, often 70-80% cheaper than hyperscalers. Some try to act as an external salesforce for larger clients, but such deals are harder to come by.

They thrived during the 2023 GPU crunch but mostly serve small, price-sensitive users, not enterprises; fragmented supply makes them ill-suited for mission-critical AI. Prices have since collapsed (e.g., H100s below $2/hr), leaving many sites listing the same excess inventory across platforms while marketplaces compete for the same customers. A lack of clear SLAs, support, and transparency about where the hardware is located makes it hard for larger buyers to trust most marketplaces.

The marketplaces that survive will be those that bundle easy-to-use software, pooled purchasing power, and community support—effectively turning thousands of micro-buyers into one "virtual enterprise" big enough to matter.

d. Bare-Metal GPU Datacenters (No Platform)

Some colos, telcos, and ex-crypto miners crammed racks with GPUs but never built a cloud-grade software layer. Selling raw "bare-metal" compute—no orchestration, support, or developer tools—forces customers to bring their own stack, so enterprise and larger AI buyers look elsewhere. Many of these operators now dump surplus GPUs onto marketplaces, but rock-bottom prices and sporadic demand rarely deliver the revenue or utilization they need. Worse, several run in facilities that fall short of Tier III reliability, further limiting enterprise appeal. Unless they add a turnkey software layer or partner with a NeoCloud, they'll remain low-margin "arms suppliers" in an AI boom that increasingly rewards full-service platforms.

2. Macro Trends Shaping the Datacenter Market

The AI infrastructure market is undergoing rapid transformation—driven not just by raw demand, but by where and how that demand manifests. Below are the seven defining trends reshaping the landscape:

a. Inference Tsunami

While training gets the headlines, the real compute demand is in inference. Once a model is deployed, over 90% of its lifetime compute is spent on test-time use—whether it's powering chatbots, search ranking, ad targeting, or robotics. With the rise of autonomous agents, copilots, and smart devices, inference FLOPs are projected to outpace training by 15-20× within a few years. The result: clouds optimized for low-latency inference, high memory bandwidth, and fast autoscaling will win the next wave of spend.
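The two figures in this paragraph are linked by a simple ratio: if inference consumes r times the FLOPs of training, inference's share of total compute is r / (1 + r). The sketch below treats the 15-20x projection as that lifetime ratio, which is a simplifying assumption on our part, and shows that a 9x ratio already corresponds to the 90% mark.

```python
def inference_share(inference_to_training_ratio: float) -> float:
    """Share of total compute spent on inference when inference uses
    `inference_to_training_ratio` times as many FLOPs as training."""
    r = inference_to_training_ratio
    return r / (1.0 + r)


for ratio in (9, 15, 20):
    print(f"inference = {ratio:>2}x training -> "
          f"{inference_share(ratio):.1%} of lifetime compute")
```

Under this reading, a 15-20x ratio means inference takes roughly 94-95% of compute, consistent with the "over 90%" figure.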

b. Latency-Critical Workloads Are the Fastest-Growing Segment

AI inference requires millisecond response times. That makes location critical: power alone isn't enough. Texas, for example, has cheap power, but many sites sit 15-20 ms from major metros, too slow for real-time AI. Fiber-rich, metro-adjacent data centers (e.g., Northern Virginia, Santa Clara, North Jersey, North Carolina, Ohio) now command premiums. The new standard is power + latency + connectivity.
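Distance converts into latency at a roughly fixed rate. The sketch below estimates round-trip propagation delay over fiber, assuming a refractive index of about 1.468 (typical for single-mode fiber) and treating the report's millisecond figures as round-trip times. It ignores routing detours, switching, and queuing, so real-world latencies will be higher than these lower bounds.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # in vacuum
FIBER_REFRACTIVE_INDEX = 1.468          # assumed; typical for single-mode fiber


def fiber_rtt_ms(route_km: float) -> float:
    """Round-trip propagation delay (ms) over a fiber route of `route_km`."""
    one_way_s = route_km * FIBER_REFRACTIVE_INDEX / SPEED_OF_LIGHT_KM_PER_S
    return 2 * one_way_s * 1000


def max_route_km(rtt_budget_ms: float) -> float:
    """Longest fiber route whose propagation delay alone fits an RTT budget."""
    one_way_s = rtt_budget_ms / 1000 / 2
    return one_way_s * SPEED_OF_LIGHT_KM_PER_S / FIBER_REFRACTIVE_INDEX


for km in (100, 500, 1500):
    print(f"{km:>5} km of fiber -> ~{fiber_rtt_ms(km):.1f} ms round trip")
print(f"15 ms budget -> at most ~{max_route_km(15):,.0f} km of fiber route")
```

Because real fiber paths run longer than straight-line distance and every network hop adds delay, a 15 ms budget covers considerably less ground in practice than the ~1,500 km this propagation-only estimate allows.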

c. Enterprise Self-Build Surge

NeoClouds expected large enterprise demand, but many top AI buyers are going direct-to-hardware. Firms like XTX Markets and JPMorgan are building their own private GPU clusters for security, control, and cost. Owning customized hardware gives enterprises more bargaining power against the hyperscalers, hedges them against shifting macroeconomic conditions, and means sensitive IP never sits on someone else's servers. This trend is stripping away the most stable demand from NeoClouds, who now face a tougher fight for customers.

d. One-Buyer Risk

A single anchor tenant can drive massive short-term growth—but also existential risk. Some NeoClouds have already been crippled by the exit of a major client, left holding financed GPUs and long-term leases. Investors and lenders now demand customer diversification and take-or-pay commitments to de-risk the model.

e. Cloud is Still a Buyer's Market

GPU prices have collapsed—H100s dropped from >$8/hr to <$2/hr in 12 months—as supply caught up with demand. Buyers have choices. In this crowded landscape, only clouds with real differentiation—sovereign compliance, better UX, integrated MLOps, or unbeatable economics—will stand out.

f. The AI Site Playbook: Five Traits That Signal Long-Term Value

A truly premium AI datacenter must have all five:

More than 100 MW of reliable power

Dual, long-haul fiber connectivity

Abundant water or advanced cooling systems

<15 ms latency to a major metro

Permitting/tax clarity

Fewer than 5% of advertised global datacenter sites check all five boxes. Hyperscalers and smart infrastructure funds are buying them now, before prices spike.
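The five-trait screen maps naturally onto a pass/fail check. The sketch below encodes the thresholds from the list above. The `Site` record, its field names, and the example sites are hypothetical, and "dual fiber" is interpreted here as at least two independent long-haul routes.

```python
from dataclasses import dataclass


@dataclass
class Site:
    """Hypothetical candidate-site record; field names are illustrative."""
    name: str
    power_mw: float                  # reliable power available
    long_haul_fiber_routes: int      # independent long-haul fiber paths
    water_or_advanced_cooling: bool  # abundant water or an advanced cooling system
    latency_to_metro_ms: float       # latency to the nearest major metro
    permitting_and_tax_clear: bool   # permitting and tax treatment are settled


def failed_criteria(site: Site) -> list[str]:
    """Return the traits a site fails; an empty list means it passes all five."""
    checks = {
        ">100 MW of reliable power": site.power_mw > 100,
        "dual long-haul fiber": site.long_haul_fiber_routes >= 2,
        "water or advanced cooling": site.water_or_advanced_cooling,
        "<15 ms latency to a major metro": site.latency_to_metro_ms < 15,
        "permitting/tax clarity": site.permitting_and_tax_clear,
    }
    return [trait for trait, passed in checks.items() if not passed]


candidates = [
    Site("Site A", power_mw=150, long_haul_fiber_routes=2, water_or_advanced_cooling=True,
         latency_to_metro_ms=8, permitting_and_tax_clear=True),
    Site("Site B", power_mw=300, long_haul_fiber_routes=1, water_or_advanced_cooling=True,
         latency_to_metro_ms=18, permitting_and_tax_clear=True),
]

for site in candidates:
    missing = failed_criteria(site)
    print(f"{site.name}: {'premium' if not missing else 'fails ' + ', '.join(missing)}")
```

Hypothetical Site B has ample power but is screened out on fiber redundancy and metro latency: cheap power in the wrong place.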

g. Sovereign AI is Inflating Regional Demand

Governments are now major compute buyers. Europe has launched a €20 billion "AI Gigafactory" plan; Gulf states and India are buying hundreds of thousands of GPUs to build national AI capacity. These sovereign projects often pay a premium, distorting regional markets and squeezing commercial buyers in those zones.

3. Market Bifurcation: Winners and Strugglers

The AI compute boom isn't lifting all boats—it's separating winners from the rest.

a. Hyperscalers: Dominant and Self-Sustaining

Hyperscalers (AWS, Azure, Google, Oracle), along with hyperscale operators like Meta, remain the backbone of AI compute, powered by insatiable internal workloads and growing enterprise demand. Over 80% of global AI capacity still runs through them. Their scale enables custom chips, aggressive pricing, and the ability to absorb excess GPU supply internally. They're not just renting compute; they're training the biggest models in-house, justifying massive capex no one else can match. The rich are getting richer.

b. NeoClouds: Fast-Growing but High-Risk

NeoClouds (CoreWeave, Crusoe, Lancium) filled gaps the hyperscalers left—faster provisioning, lower prices, better UX. They've grown 10× in revenue, winning startups and overflow from even Meta and OpenAI. But they're heavily debt-financed and vulnerable to one-buyer risk. Many bet on big enterprise demand that never came, while hyperscalers are now pushing back with lower prices and reserved capacity. NeoClouds are winning—for now—but running hot with thin margins and no room for error.

c. Marketplaces: Clearing Houses, Not Cloud Platforms

GPU marketplaces (Vast.ai, RunPod) serve the long tail—hobbyists and indie devs, not Fortune 500s. Prices fell from $8/hr to <$2/hr, signaling oversupply. Marketplaces are useful for absorbing idle capacity, but lack trust, support, and SLAs to win large buyers. Without differentiation or scale, they're stuck in margin compression and buyer churn.

d. Bare-Metal Datacenters: Stranded Without Software

Owning GPUs isn't enough—AI buyers want full-stack solutions. Many bare-metal datacenters lack orchestration, developer tools, or proper Tier III facilities. They're forced to dump capacity on marketplaces, earning little. Some may survive by leasing wholesale to hyperscalers or partnering with NeoClouds, but most face underutilization and consolidation risk.

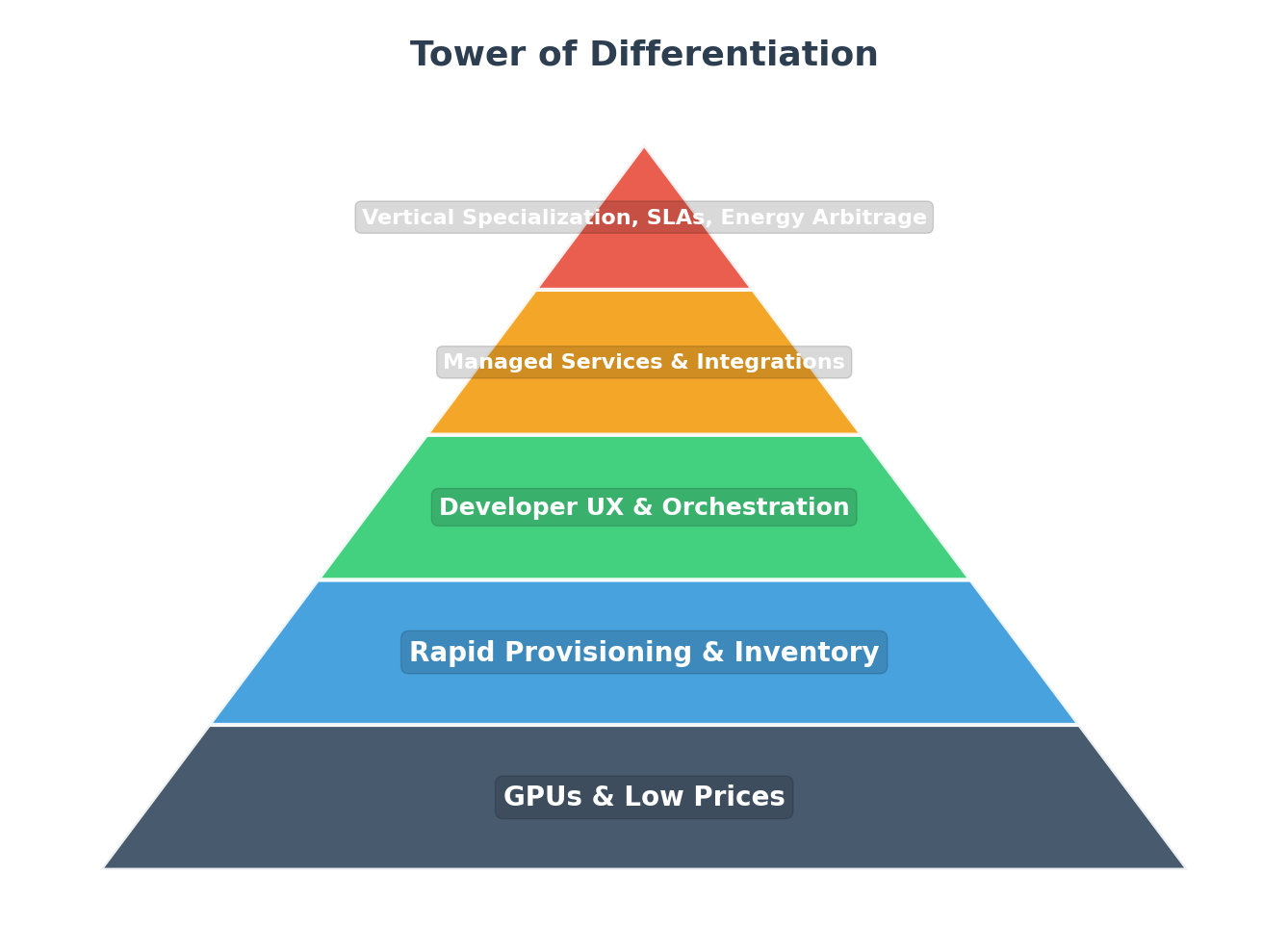

The bottom line is that the market is bifurcating. Winners deliver speed, support, software, and strategic positioning. Strugglers are left with idle hardware and no value-add. The new currency is integration and differentiation—not just raw compute.

Figure: Market positioning - Leaders driving growth vs. players facing challenges in the evolving GPU cloud landscape

4. Our Strategic Recommendations

Here's our advice for NeoClouds, enterprises, colo operators, and investors navigating this shifting market:

a. NeoClouds: Specialize and Stabilize

Competing on price alone won't cut it. The winners will specialize by owning vertical use cases—biotech, quant finance, media rendering, robotics, etc.—and offering tailored software, SLAs, and developer tooling.

Avoid the one-buyer trap: the market has evolved, and the risk this strategy poses is simply too high. Build a diverse client base of smaller, consistent users with the right support stack, orchestration layer, and onboarding experience. If you're missing these, partner or license to get there faster. We would love to work with you to refine this strategy and find buyers or partners.

Figure: Tower of Differentiation - Strategic layers NeoClouds must build to compete effectively

b. Enterprise Buyers: Own vs Rent, But Optimize Either Way

If you're running 24/7 inference or training massive models, building your own cluster might make sense. We're seeing firms like XTX Markets and JPMorgan go this route to control costs, keep their IP fully private, hedge against GPU rental prices rising again, and reduce latency.

Not ready to own yet? You can still negotiate better contracts, pursue hybrid setups, or consider managed dedicated clusters. We help AI companies evaluate TCO, select sites, and match with the right providers without overpaying for suboptimal infrastructure. We can even connect you with companies that offer datacenter build-out as a service, so you don't overbuild.
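A first-pass own-versus-rent comparison reduces to one number: the utilization above which owning a GPU costs less than renting it on demand. The sketch below uses hypothetical inputs and deliberately ignores financing costs, resale value, and in-house staffing, all of which a real TCO model would need.

```python
HOURS_PER_YEAR = 24 * 365


def owned_cost_per_gpu_year(capex_per_gpu: float, amortization_years: float,
                            opex_per_gpu_hour: float) -> float:
    """Annualized cost of owning one GPU; paid whether it is busy or idle."""
    return capex_per_gpu / amortization_years + opex_per_gpu_hour * HOURS_PER_YEAR


def rented_cost_per_gpu_year(price_per_gpu_hour: float, utilization: float) -> float:
    """Annual cost of renting on demand, paying only for the hours actually used."""
    return price_per_gpu_hour * HOURS_PER_YEAR * utilization


def crossover_utilization(capex_per_gpu: float, amortization_years: float,
                          opex_per_gpu_hour: float, price_per_gpu_hour: float) -> float:
    """Utilization above which owning is cheaper than renting at the given price."""
    owned = owned_cost_per_gpu_year(capex_per_gpu, amortization_years, opex_per_gpu_hour)
    return owned / rented_cost_per_gpu_year(price_per_gpu_hour, 1.0)


# Hypothetical inputs: $25k per GPU over 4 years, $0.25/hr to run, $2/hr to rent
crossover = crossover_utilization(25_000, 4, 0.25, 2.00)
print(f"Owning wins above ~{crossover:.0%} utilization")
print(f"24/7 workload: own ${owned_cost_per_gpu_year(25_000, 4, 0.25):,.0f} "
      f"vs rent ${rented_cost_per_gpu_year(2.00, 1.0):,.0f} per GPU-year")
```

Under these assumptions, a 24/7 workload costs roughly half as much to own as to rent, while one that keeps GPUs busy less than about half the time is cheaper to rent. Owning also locks in today's cost basis, which is the hedge against rental prices rising again.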

c. Colo Operators: Add Value or Get Bypassed

Hardware alone isn't enough—enterprises want more than rackspace. Without orchestration or developer tools, your GPUs will stay idle or end up listed on marketplaces with no pricing power. Instead, consider layering managed services or aligning with NeoCloud players to become part of their ecosystem. If your site has Tier III+ infra, fiber and water access, abundant power, and close proximity to a city, there's real opportunity. If not, we can help assess and reposition.

d. Investors: Follow Utilization, Not Hype

Distressed GPU owners, stranded mining sites, and underutilized colo assets are the biggest arbitrage opportunity today. But location quality (power, fiber, water, latency) is everything. If you're holding infrastructure or capital and unsure how to turn it into AI yield—we'll show you who's buying, how to convert, and where to avoid mistakes. Our deal flow and visibility can give you a head start.

5. Under-Reported Insights & Forward Outlook

As the AI infrastructure market matures, the surface story of growth and hype gives way to deeper, more structural realities. Below, we highlight the most important—yet often overlooked—forces shaping the future of NeoClouds and datacenter investment. For firms navigating these shifts, understanding where value is created (and destroyed) is essential.

a. NeoCloud Economics: 90/10 Market

The majority of NeoCloud providers are built on high-debt, high-utilization assumptions. If they fail to hit scale, their models collapse. Expect most upstarts to fail or consolidate—only the top 10% will build sustainable businesses. Understanding utilization dynamics and customer concentration early is key to avoiding stranded assets.
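Customer concentration is easy to quantify from a revenue breakdown. The sketch below computes two common measures: the largest customer's revenue share and the Herfindahl-Hirschman Index (HHI, the sum of squared revenue shares). HHI is a standard concentration metric swapped in here for illustration, not one the report prescribes; the customer names and revenue figures are hypothetical.

```python
def concentration(revenue_by_customer: dict[str, float]) -> tuple[float, float]:
    """Return (largest customer's revenue share, HHI) for a revenue breakdown.

    HHI is the sum of squared revenue shares: 1.0 means a single customer,
    while N equally sized customers give 1/N."""
    total = sum(revenue_by_customer.values())
    if total <= 0:
        raise ValueError("total revenue must be positive")
    shares = [revenue / total for revenue in revenue_by_customer.values()]
    return max(shares), sum(share * share for share in shares)


# Hypothetical revenue mixes (USD millions)
anchor_heavy = {"anchor": 77.0, "lab_b": 10.0, "lab_c": 8.0, "startup_d": 5.0}
diversified = {f"customer_{i}": 5.0 for i in range(20)}

for label, mix in (("anchor-heavy", anchor_heavy), ("diversified", diversified)):
    top, hhi = concentration(mix)
    print(f"{label:>12}: top customer {top:.0%}, HHI {hhi:.2f}")
```

Tracking both numbers over time shows whether growth is coming from a broadening customer base or from deeper dependence on one anchor tenant.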

b. Site Scarcity, Not Power Scarcity

Power is available, but Tier III+ sites with 100+ MW, water, dual fiber, and low latency to metro hubs are scarce. Those are what everyone is chasing. Markets that many still consider highly attractive, such as Texas, are starting to saturate, but new opportunities exist in overlooked regions with the right fundamentals. We've helped investors and builders identify these locations before the crowd. Site selection is where an edge is made or lost.

c. Sovereign AI: Government-Backed Cloud is Here

From the UAE's 5 GW cluster to Europe's €20B AI gigafactories, sovereign-backed infrastructure is reshaping the landscape. These clouds have locked-in demand and are playing a long game, which makes them harder to compete with but valuable to partner with or emulate. Understanding which regions are overbuilt and which will attract sovereign tailwinds is a growing strategic advantage.

d. Inference Will Eclipse Training

Production inference is scaling faster than training—with some estimates suggesting a 15-20× delta by 2027. Hardware optimized for inference, low-latency sites near cities, and edge AI will matter more than brute force GPU clusters in the long run. Those who can support inference workloads at scale or provide the hyperscalers with the power—without overbuilding—will lead in unit economics.

e. Value is Moving Up the Stack

Raw compute is becoming commoditized. Clouds that layer software, vertical tools, or managed AI services are pulling ahead. The margin isn't in the silicon—it's in what you do with it.

f. Consolidation is Inevitable

The market is bifurcating—hyperscalers will buy distressed clouds, colos will partner or vanish, and only the most adaptive NeoClouds will survive. Investors need to underwrite not just hardware—but resilience and differentiation. We've seen early signs of both value traps and breakout winners—and can help make sense of the field.

The race to build AI infrastructure is still early—but increasingly competitive. Those who move with precision—securing the right sites, utilization models, and demand channels—will capture durable value. Those who don't will be left with idle racks and expensive mistakes. If you're building, investing, or scaling in this market and want an edge—on where to go, who to trust, or how to build—we're always happy to share what we're seeing on the ground.