What Happens to Datacenters When Smaller Models Start Solving Bigger Problems

An analysis of how the evolution towards more efficient, smaller AI models will impact datacenter infrastructure, compute demand, and the broader AI hardware ecosystem.

A few days ago, an investor who finances large-scale datacenters asked us a simple question:

"I saw something about a new model that runs on a laptop but gets state-of-the-art results on reasoning problems and puzzles. Does this change anything for us?"

That question gets to the heart of what this note is about: What does the future of AI workloads look like, and what does it mean for the datacenter ecosystem?

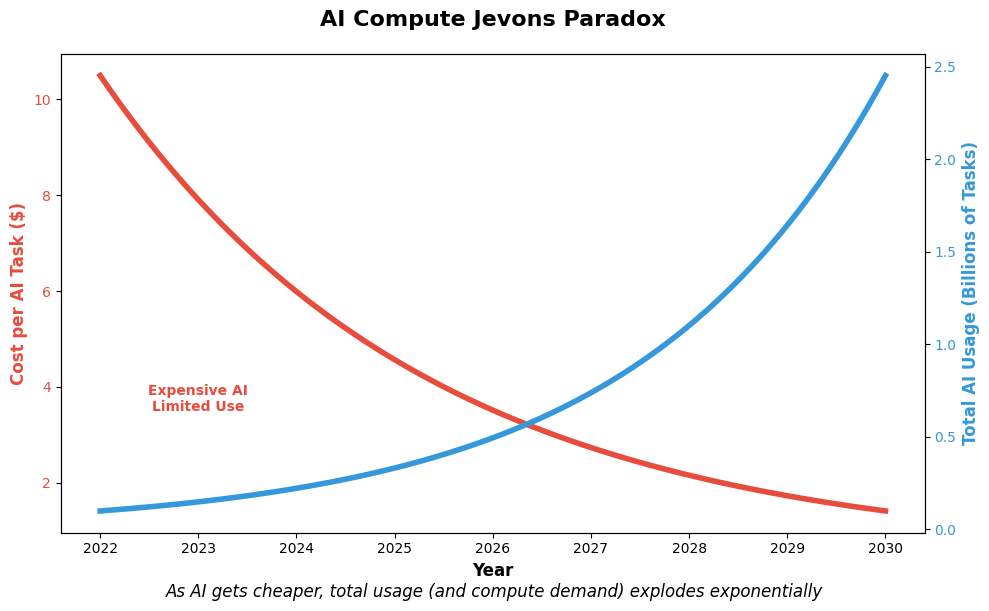

If models continue getting smaller, faster to train, and easier to run, does demand for large-scale infrastructure fall off? Or does Jevons paradox kick in—where making compute cheaper and more efficient only drives usage even higher?

Fig: As AI gets cheaper, total usage (and compute demand) explodes exponentially

Source: FPX AI

The AI industry has been built on a simple premise: bigger models yield better results. For years, the race has been to scale up: more parameters, more data, more compute. However, a paradigm shift is underway. Smaller, more efficient models are beginning to solve problems that were once the exclusive domain of their larger counterparts. This evolution raises a critical question: What happens to the massive datacenter infrastructure built to support these computational giants when the future belongs to the efficient and compact?

This transformation isn't just about model architecture. It's about fundamentally reimagining how we approach AI infrastructure, resource allocation, and the economics of artificial intelligence. As we stand at this inflection point, understanding the implications for datacenters, cloud providers, and the broader AI ecosystem becomes paramount.

The Hierarchical Reasoning Model: Rethinking Where the Compute Goes

Let's start with the model that sparked the question. The model in question is the Hierarchical Reasoning Model (HRM), introduced in a recent paper by Wang et al. (2024). It is modelled after the human brain, it's a compact, 27-million-parameter model—small enough to run on a laptop. But in a series of symbolic reasoning tasks that typically baffle even massive LLMs, HRM matches or beats them—without any pretraining, and using just a few hundred examples per task.

So what makes it different?

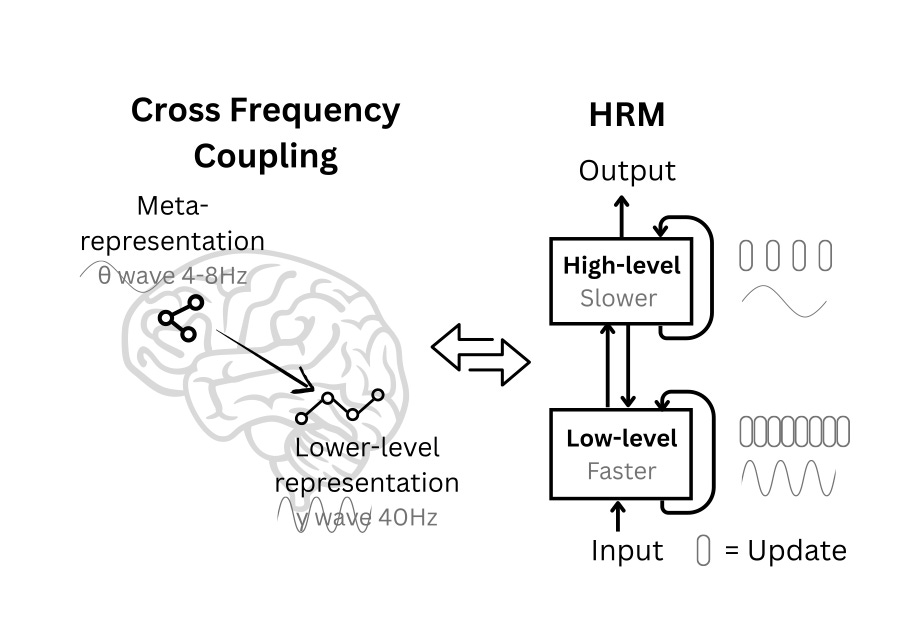

HRM abandons the typical transformer approach of processing everything in a single pass. Instead, it's built around a simple but powerful principle: not all reasoning should happen at the same speed or level of abstraction. It splits computation into two loops:

A slow, high-level module that plans and sets the context

A fast, low-level module that iterates on subproblems and refines solutions

Fig: HRM is inspired by hierarchical processing and temporal separation in the brain. It has two recurrent networks operating at different timescales to collaboratively solve tasks.

Source: Hierarchical Reasoning Model

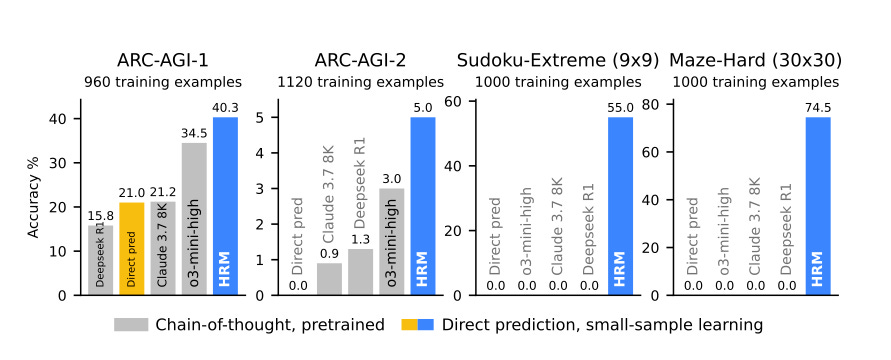

Fig: The HRM (~27M parameters) outperforms state-of-the-art chain-of-thought models on tough benchmarks like ARC-AGI, Sudoku-Extreme, and Maze-Hard—where those models failed. It was trained from scratch and solved tasks directly, without using chain-of-thought reasoning.

Source: Hierarchical Reasoning Model

These two modules interact in a loop, feeding results back and forth until the model "converges" on an answer. This mimics how humans often solve hard problems—trying something, stepping back, adjusting the plan, and trying again.

What's elegant here is that the model isn't bigger; it's deeper in time. Instead of stacking more layers, it loops smarter. This allows HRM to "think" for longer on hard problems and less on easy ones, without blowing up memory or compute.

Hardware Implications

From first principles, this matters because it reshapes how we measure model capability. Instead of more parameters or more tokens, performance can come from more internal steps, more efficiently executed. That has serious implications for hardware.

The workloads look different: less like giant matrix multiplications (that GPUs are good at), more like recurrent, latency-sensitive programs. Hardware optimized for streaming, tight memory access, or fast feedback cycles may suddenly have an edge.

HRM is far from general-purpose. It doesn't replace LLMs or handle open-ended language tasks. But it shows that reasoning—long considered the domain of massive models—can be re-architected. And if that happens at scale, the entire shape of compute demand could shift.

The bottom line is that algorithmic innovation won't stop and neither will the demand for AI workloads, the winners will be the companies that can act fast across the stack.

The Future of AI Workloads Is Hybrid—and Smaller Than You Think

If training drove the last wave of AI infrastructure buildout, inference will drive the next. And inference—especially for robotics and real-world AI applications—is increasingly moving toward smaller, more capable models that don't need hyperscale clusters to operate.

We're seeing a quiet shift. Small models are getting more complicated—architecturally deeper, more adaptive, more reasoning-capable—and they can increasingly run on edge devices, laptops, or compact datacenter instances. That makes them natural candidates for Physical AI, a term we use to describe the entire class of embodied agents, sensors, robots, and autonomous machines interacting with the physical world.

By the end of the decade, inference related to Physical AI will likely dominate total compute usage, simply because these systems will be running continuously and everywhere—from home robots to warehouse automation to autonomous industrial systems.

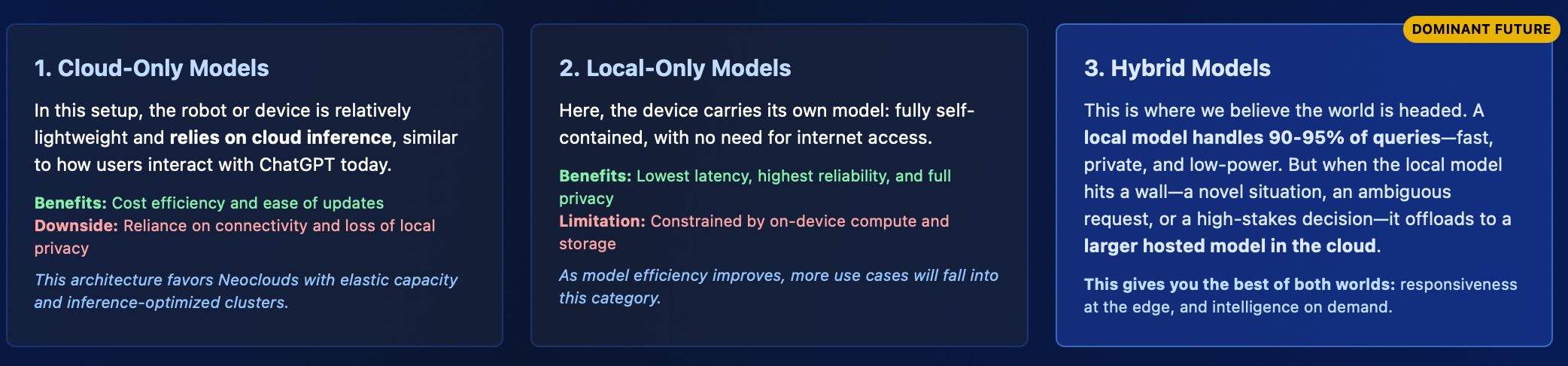

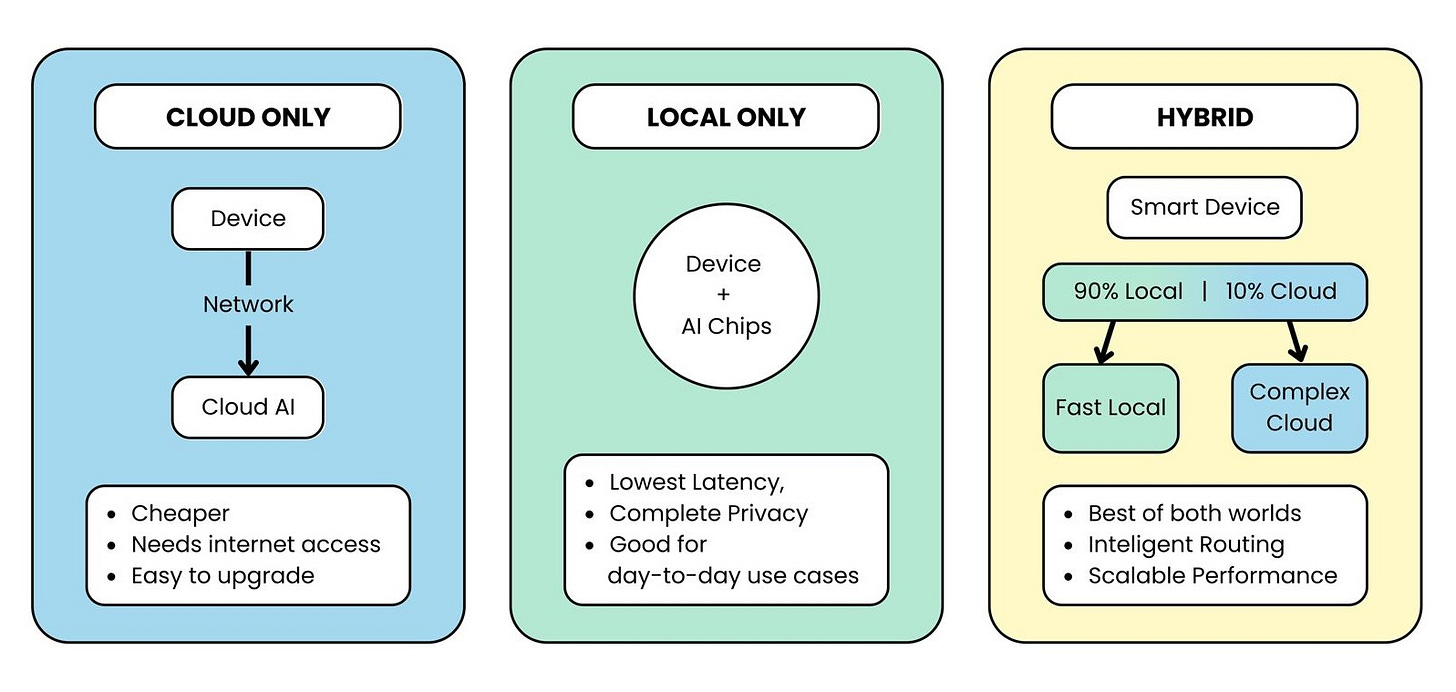

Yet while models are shrinking in size, scaling laws aren't going anywhere. There will still be value in pushing large, centralized models to new heights. What's changing is how and where those models are used. We see three clear archetypes emerging in Physical AI deployment:

Fig: Three deployment models for AI workloads: Cloud Only, Local Only, and Hybrid approaches, each with their own advantages and use cases.

Source: FPX AI

What This Means for the Infrastructure Market

Serving these hybrid and cloud-first models is where Neoclouds have the edge.

Gone is the model of a single hyperscaler tenant consuming 80% of your datacenter. The new era demands multi-client, multi-hardware flexibility—supporting dozens of AI-native customers, each with their own architecture preferences, latency needs, and usage spikes.

The operators who thrive will be the ones who:

Design for shorter, bursty inference jobs, not just long training runs

Offer hardware optionality, not just NVIDIA GPUs—some clients will want AMD, FPGAs, CPUs, or future inference-specific ASICs

Build low-latency, urban-proximate datacenters near major metros, where Physical AI agents live and act

That means infrastructure is becoming a demand-shaping problem, not just a supply problem. You're no longer building the biggest possible box—you're building the right box in the right place, with the right routing intelligence.

This is where firms like ours are focused. We help colocation providers identify the best inference-grade locations, advise Neoclouds on how to think about next-gen data center design, and help them build teams that can support not just large GPU clusters, but also hybrid workloads, robotic deployments, and edge-cloud architectures.

The future of AI workloads isn't just about model size. It's about distribution, specialization, and responsiveness. And the datacenter strategy that wins will be the one designed to match.

The AI Infrastructure Shift: How to Win if You Build, Fund, or Operate It

For Datacenter Operators: Verticalize, Specialize, and Move Fast

If you do not have any existing large scale training client or the scale that large neoclouds like CoreWeave or Crusoe do, chasing large-scale training clients is a losing game. The better path is to go vertical: pick a high-value niche—robotics, vision QA, healthcare AI—and build around it. That means low-latency colocation sites near major metros, ideally on diverse fiber paths for minimal RTT. If you're offering bare metal, make sure it's production-ready: full observability, Slurm or Kubernetes orchestration, flexible and transparent SLAs.

Differentiate with real benchmarks—training vs. inference, single-node vs. multi-node—and support hardware isolation (MIG, SR-IOV, DPUs) to ensure multi-tenant stability. Add compliance features buyers care about—HIPAA, PCI, data residency—and be ready to answer questions in $/task, not just $/GPU.

Your edge won't come from raw silicon—it'll come from the software stack and services you wrap around it. Either build or partner to deliver tooling that solves real user problems. Talk to buyers about their workloads and help them pick the right hardware for their task, not just the most expensive. Use marketplaces to monetize excess capacity, and staff teams who understand the space from first principles—because in an environment where new chips and architectures emerge constantly, the first operators who adapt will win the margin.

FPX Consulting works with Neoclouds to help guide you through exactly what buyers are looking for—from hardware procurement and colo expansion to identifying the right sites and building out your stack. We'd love to help.

For Colocation & Power Developers: Designing Datacenter Portfolios That Sell

The most valuable asset in today's datacenter market isn't just power—it's power that's actually deliverable today. For colocation operators, site selection is no longer just about future power expansion or land area—it's about how quickly you can stand up compute that generates revenue. The highest premiums today go to sites that offer immediate, energizable power, diverse long-haul fiber access, water rights that future-proof cooling, and near a tier 1 or 2 Metro to get easy access to talent.

Think of your portfolio in tiers. Your Tier A sites should be metro-adjacent with energization timelines under 12 months. These are your go-to locations inference hubs 15-50MW sites that serve latency-sensitive clients. Your Tier B sites should have substation pads poured, transmission interconnects in motion, and a clear path to 50–200 MW over 24–36 months. These campuses become your long-term scale plays—especially if they're near robotics, biotech, or AI manufacturing hubs.

Great sites combine strong shells and physical security with dual utility feeds or ring bus potential, true fiber diversity (not shared ROW), access to reclaimed or permitted water, and zoning that enables fast-track development. Great operators match this with conversion-ready buildouts—flexible power distribution, busways, and cooling that allow rapid swings between training and inference workloads.

As more colocation supply comes online, standing out will require more than just MW and square footage. Partnering with operators or platforms that offer true specialization and IP—like Colovore, who deliver high power and cooling density per rack and support diverse hardware types beyond just NVIDIA—can give your portfolio an edge. Tenants are becoming more sophisticated, and differentiated technical capabilities will drive faster absorption and longer-term value.

At FPX, we help operators and investors design and build high-performance portfolios across Tier A and Tier B assets. We also assess, validate, and help market existing sites—whether to Hyperscalers, Neoclouds, or specialized buyers looking for GPU-ready infrastructure. If you're siting a new facility or repositioning an old one, we'd love to help.

For Investors: Finding the Edge in an Evolving AI Infra Market

If you're an investor financing datacenters or colocation projects, the next decade of returns won't come from chasing hyperscaler training deals—they'll come from backing operators who know how to monetize specialized, low-latency, hybrid inference. The winners will be teams that think from first principles, operate metro-adjacent sites with real power and fiber, and deploy workload-driven infrastructure, not just racks of GPUs.

The biggest opportunities right now are often hidden in distress—stranded campuses with undeliverable power, failed single-tenant plays, or long-lead substation delays that can be converted into multi-tenant inference hubs with the right upgrades. Your portfolio should combine Tier A revenue sites (energization <12 months, 200–500kW pods, heterogeneous-ready) with Tier B growth assets (substation pads poured, water secured, GIAs in motion).

Red flags are everywhere: fake fiber diversity, "paper megawatts," and operators that can't benchmark $/task or latency.

FPX Consulting works directly with investors to source off-market deals, conduct deep power and fiber due diligence, and help structure portfolios that reflect where AI infrastructure is actually headed—not where it used to be. We'd love to help.